Fast, Efficient Messaging for Huddled

Fast, Efficient Messaging for Huddled

Goals

Before we start, lets Identify some performance goals we want to hit.

Performance Metrics & Goals

Current Performance

- Messages load time: 3-5 seconds per subgroup

- Messages per page: 20 (limited by performance)

- Server calls per message: 3+ (basic info, reactions, decryption, other)

- Client-side processing time: 1-2 seconds for message transformation

Target Metrics

- Message (single) render time: < 80 milliseconds per subgroup

- Messages per page: 42 (matching Slack's optimal number)

- Server calls per message: 1 (consolidated data structure)

- Client-side processing time: < 200ms

Industry Benchmarks

- Slack: 42 messages loaded within 1 second

- Discord: Seamless scrolling with minimal latency

- Industry standard: < 2 second load time for content visibility

Current Limitations

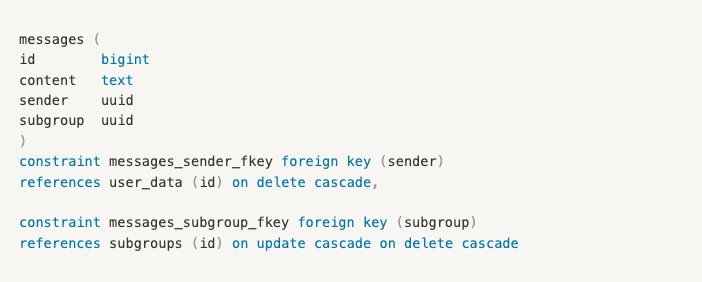

As of right now, messages for subgroups are stored in the messages table. This table holds references to the sender and the subgroup it was sent in. A basic overview of the table is like this:

This allows us the basic data we need access to, such as who sent the message, where it was sent, and the actual message itself. When it comes to reactions, they exist on a normalised table message_reactions and look like:

When we retrieve messages, what we end up doing is loading all the basic information, then for each message, we do another call to get the reactions associated to it. This, as you can imagine, is extremely inefficient and is very slow to do for every message thats been loaded.

On top of that, each message is originally sent to the client encrypted, and another call needs to be made to get the decrypted value for each message.

And then we have embeds, media, files etc. which add another layer of loading. All together, the time it takes to load a single subgroup with just a few messages is between 3-5 seconds.

We mitigate some loading pains by paginating the messages. each time messages are loaded it fetches 20 records. The reason this is 20 is because any more than this and the time it takes to perform these transformations mentioned above become too slow. Another thing we do is prefetch messages when a user hovers over a relevant link to another chat. The issues with these 2 optimisations however is that even with them, the time-to-load is still horrible, and on top of that, mobile devices don’t really get access to these optimisations as most actions happen on click/tap and with paginating messages, the whole process of getting data over multiple calls, transforming them, etc is done client side. On devices like mobiles, with potentially lower hardware bandwidth, this makes things really slow.

Comparison: Slack

The approach Slack uses to messaging is quite direct as their platform is a messaging first platform. Their approach focuses on efficient message loading and predictive prefetching to provide a better user experience, choosing to hide/mask the loading behind smart tricks.

Key takeaways

Slack has an interesting ways of solving these issues that they discuss on their blogs. the key points they seem to follow when handling messages are:

- Do less up front.

- Don’t load all channels’ unread messages right away.

- Be really lazy.

- Don’t fetch more than needed for channels with unreads. Prefer mentions and avoid prefetching too much, to keep the client model lightweight.

- Prepare in the background.

- New messages received can present an opportunity to prefetch history.

- Be one step ahead of the user.

- Use “frecency” and user actions to inform and prefetch the user’s next move.

How can we use these in Huddled?

Currently our chat is the bare minimum, as such the first 2 points don’t really make too much sense right now but that doesn't mean we can’t take anything away from them.

One of the things they mentioned is their magical number 42 for thew amount of messages to load per page. I think their justification was sound and we should also target being able to load that amount of messages within a second of the user action being made.

That being said, the main points I was to explore are the last two. Prefetching seems to be a very powerful tool. As it is now, we’re only doing it on hover, but there are times when we want to do it optimistically. This includes when the user receives a message, or when they are added to a subgroup. The reasoning behind this is, on a new message or joining a new subgroup, they’d likely try to open or view that chat history, having that conversation ready for them will be a huge boost.

Slack also has their way of predicting which channel you’ll open, from their blog:

Not only do we know which channels to pre-fetch, we also learn which channels you view the most often (frequency), and most recently (recency) — hence, “frecency”.

This means they can effectively load conversations they think you will open before you actually open them. We could implement a similar system by tracking conversation visits at a minimum, even breaking it down by time of day would make it better.

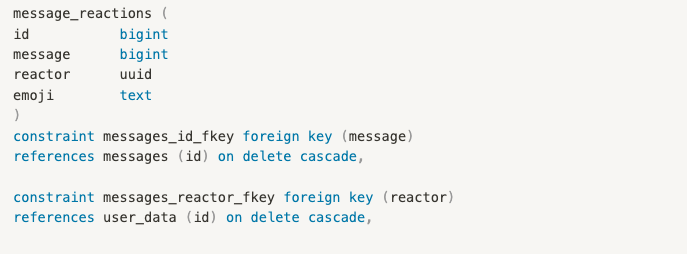

The last thing i want to touch on, which Slack covers in their second part to their dev blog is caching. They mention using local storage for their previous caching methods and ran into lots of issues with size, corruption, etc. Although they do not specify what their solution was by name, we can infer it was either indexedDB or some sort of in memory cache solution.

We could also do an in-memory solution with Redis or preferably Valkey or another open source alternative, this however means implementing and maintaining a version of this in our application.

The second option was to use IndexedDB to cache messages which would worked on a client rended application but we use SSR and so it would be too much overhead to manage syncing the caches between the browser and the server.

Given the 2 options it would be better to use an in memory cache instead. My plan will be to use a Map() to test it out before deploying a full solution like Valkey.

A quick thought for implementation would be something like:

This can then be used in the browser or in the server.

Comparison: Discord

Discord doesn’t really talk much about their messaging strategy but looking into their desktop app we can make assumptions on what they’re doing. The other consideration I looked towards Discord for was how they render their messages, the rich input, reactions, files, etc.

Key takeaways

- Structure is Key

- Keep data well-structured and understandable.

- Keep memory to a minimum

- Make sure to limit things to only what you need.

- Latency can be simulated.

- Sending a user's message before it’s actually been sent could make the experience more fluid for the end user.

- Save as much data as possible.

- Saving as much data as you can about embeds, files, images, etc helps when you need to render something quickly.

How can we use these in Huddled?

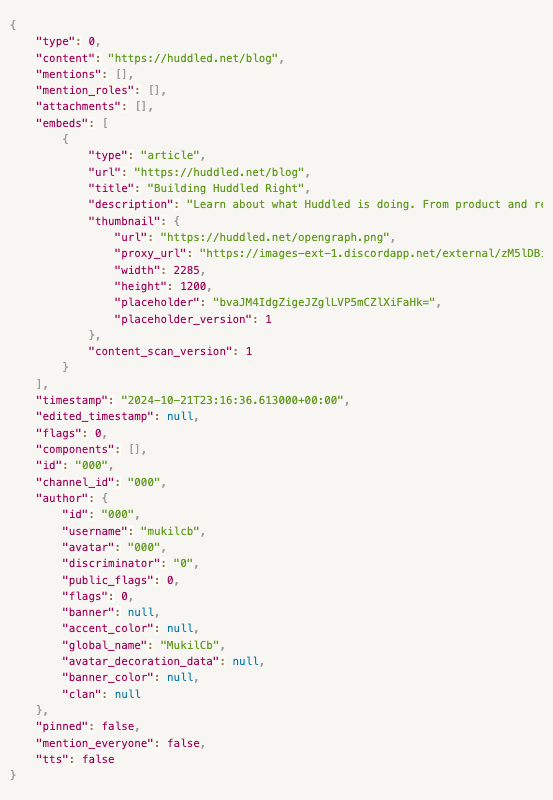

I really like the way Discord approaches its message structure. Currently, our message structure is restrictive and not open to growth. Here is an example of a discord message object:

This message object contains everything we need to know about the message itself, while also making it easy to extend. For example, the type field is 0 but for a reply it would be 19 , keeping the types system open means adding different types of messages is easy and can be simply added, removed or modified. The way they store all the embed data is also helpful to quickly render link previews using OpenGraph data instead of how we do it now which is slow and synchronous.

Another thing Discord does is when paginating messages, Discord uses pagination as a range, instead of appending to a list. this allows them to keep the memory of stored messages at a minimum, loading newer or older messages when needed, or pulling them from cache. The way they get these messages is based on the timestamp:

messages?before=1297819087765770250&limit=50

This is used instead of pages and offsets as now you can just load all messages before the final message time in the array.

What now?

Now that we have this insight, we need to consider implementation. The first step would be to identify resources.

Development Resources

- Time

This might well be a full overhaul of the messaging system.

Infrastructure Needs

💽 The following assumes at scale values of:

-

Maximum concurrent active conversations: 5,000

-

Total stored conversations: 4,000

-

Maximum concurrent users: ~25,000 </aside>

-

Cache server requirements at scale (5000):

- Memory: 8GB minimum for production

- Storage: 50GB SSD for message cache

- Network: High-bandwidth, low-latency connection

-

Additional server capacity for prefetching system

-

Monitoring and logging infrastructure

Third-party Dependencies

- Redis or Valkey or other for caching

- Performance monitoring tools

- Load testing infrastructure

Success Strategy

Success Criteria

- Message (total) load time < 1 second for 42 messages

- Message (single) render time < 100 milliseconds

- Cache hit rate > 80%

- Mobile performance matching desktop

- User satisfaction metrics improvement

Other Notes/Takeaways

Realtime

One thing to be weary about is the way realtime is currently done. The supabase realtime connection is slow, and can get quickly get expensive. Eventually, like Slack and Discord, we will have to move to our own api server and host websocket connections. I estimate the scale at which we would need to do that is roughly around 1,000 concurrent realtime connections.

Security

The Cache for the messages store needs to be securely hosted and refuse connections from unauthorised connections as it will store sensitive data.

Time

A conversation to have about out timeline and what exactly we are doing. Are we at a stage where we can move slower and a bit more relaxed. If yes, this project should be done at the developers pace and the entire team needs to understand how it works, whether that be doing learning modules or explanation meetings. Shortcuts should be used as a last resort.

If not, this project should not be done at all.

Ending Notes

The proposed changes are expected to reduce message load times by a lot and significantly improve user experience, as well as bring us into line with more common messaging applications.

I don’t really have much else to say, if you’ve made it this far, here is a cat